Yesterday’s terror attack in Barcelona is yet another reminder that the war against terrorist organizations like ISIS is far from over. But this war is also being waged on the digital front.

The strong links between terrorism and social media are nothing new. ISIS uses Twitter and Facebook accounts to recruit new fighters. The group uses YouTube to publish beheading videos and messages about its so-called caliphate. To coordinate attacks, ISIS terrorists have used the Telegram Messenger app. There is even a newsletter designed to spread the organization's ideas.

This week’s attack in Barcelona was quickly claimed by ISIS on its social media channels and through its own news agency, Amaq (ISIS social media accounts celebrate Barcelona attack).

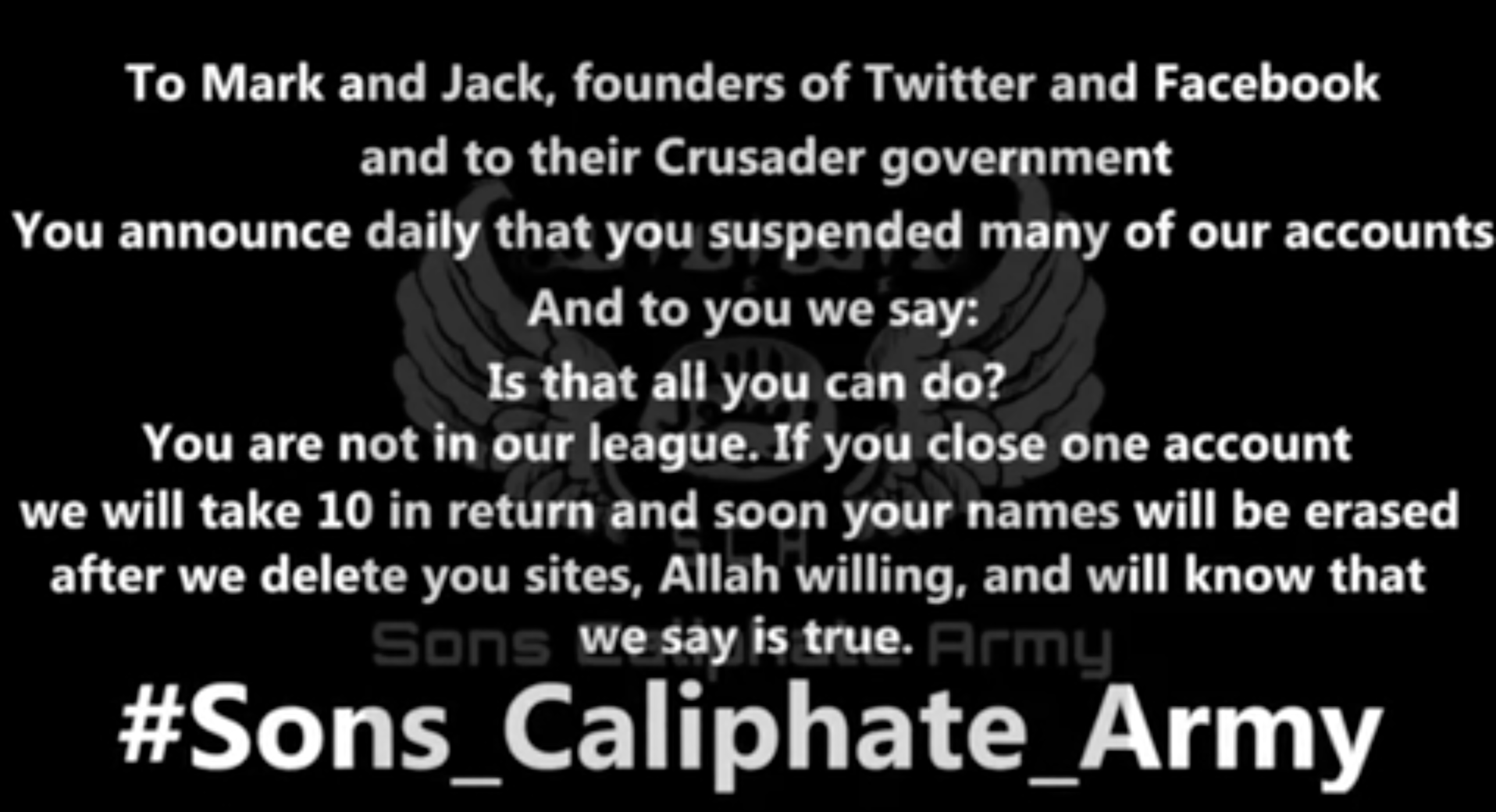

Source: Isis video targets Twitter and Facebook CEOs over suspended accounts

Social media is so important to ISIS that, in a 2015 interview with the Washington Post (Inside the surreal world of ISIS propaganda), a defector from the group said: “The media people are more important than the soldiers. Their monthly income is higher. They have better cars. They have the power to encourage those inside to fight and the power to bring more recruits to the Islamic State.” The organization recognized the potential of social media and even built its own internal social network, according to Europol (ISIS created its own social network to spread propaganda).

Realizing how important social media is to ISIS, tech giants and governments have been fighting an online war against the organization by joining forces and removing its content.

According to a Forbes article from June, Facebook, Microsoft, YouTube and Twitter are now working together to fight online terrorism. The four companies are building a shared database of digital fingerprints ("hashes") of the terrorist content they have removed from their platforms (Facebook, Microsoft, Twitter And YouTube Collaborate To Fight Terrorism).

Facebook is putting a lot of effort into removing harmful content. The company is hiring 3,000 additional people for just this purpose. According to a Guardian article, Facebook used to rely on users to flag dangerous content, but because users were not doing so to a sufficient degree, the company has adopted a more proactive approach and now flags that content itself (Facebook is hiring moderators. But is the job too gruesome to handle?).

However, this is not an easy task, as the amount of content published online is staggering: every second, 79,000 posts are added to Facebook and one hour of video is published on YouTube.

YouTube and Facebook are using artificial intelligence (AI) to help their teams address the problem of terrorism on their platforms. Facebook's AI team trains algorithms to identify extremist images and language, and to shut down accounts linked to terrorist content. In the words of Brian Fishman, Facebook's counterterrorism policy manager: “When someone tries to upload a terrorist photo or video, our systems look for whether the image matches a known terrorism photo or video… we can work to prevent other accounts from uploading the same video to our site.” (Facebook using artificial intelligence combat terrorist propaganda).

The results look promising: the technology allows the big tech companies to remove harmful content quickly. However, these projects are still at an early stage and require human supervision, because the system can only “remove the black-and-white cases,” as Brian Fishman pointed out (Facebook wants to use artificial intelligence to block terrorists online). The technology still has a long way to go before it can handle more complex cases.

Meanwhile, social media continues to play a significant role in helping extremist ideologies find an eager audience. Further initiatives will be needed if we want to stop extremists. But this problem is clearly beyond what the tech platforms can handle alone: users will need to play their part by reporting all posts that promote extremism, incite violence or encourage irresponsible behavior. And should governments play a larger role? In Germany, a new law allows social media platforms to be fined if they fail to tackle hate speech. What do you think?